YouTube videos: Bitnet Transformer Explained

Bitnet Transformer Explained: Future Of AI

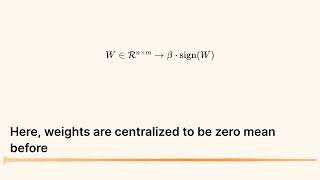

BitNet: Scaling 1-bit Transformers for Large Language Models

1-Bit LLM: The Most Efficient LLM Possible?

The myth of 1-bit LLMs | Quantization-Aware Training

BitNet: Energy Efficient 1 bit Transformers for Large Language Models!

The Era of 1-bit LLMs by Microsoft | AI Paper Explained

BitNet b1.58 2B4T: A BREAKTHROUGH IN THE WORLD OF ARTIFICIAL INTELLIGENCE from Microsoft. A Coincidence?

Microsoft Bitnet B1.58

I Got the Smallest (and Dumbest) LLM

BitNet b1 58 GPU Test

What is vLLM? Efficient AI Inference for Large Language Models

Getting Started With Hugging Face in 15 Minutes | Transformers, Pipeline, Tokenizer, Models

BitNet b1.58: The era of 1-bit LLMs

Quantizing LLMs - How & Why (8-Bit, 4-Bit, GGUF & More)

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits - Paper Explained

Exploring BitNet 1-bit LLM: Conceptual Introduction and AI Research Review

ArxivDailyShow (October 18, 2023)